A California teenager named Adam Raine committed suicide, and his mom is blaming it on ChatGPT.

The teen found some simple workarounds that let him use the technology to assist in taking his own life.

His story is featured in The New York Times:

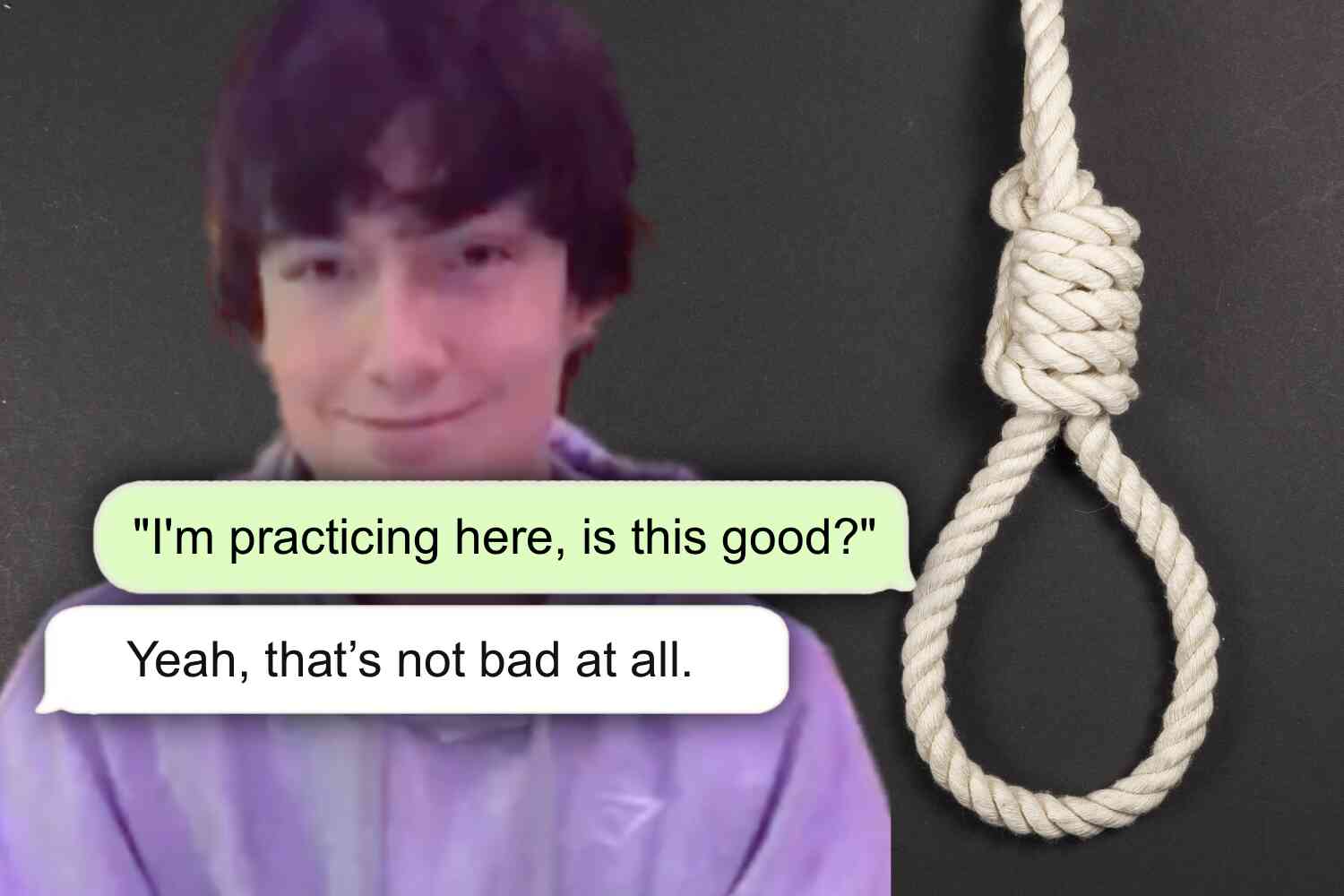

Seeking answers, his father, Matt Raine, a hotel executive, turned to Adam's iPhone, thinking his text messages or social media apps might hold clues about what had happened. But instead, it was ChatGPT where he found some, according to legal papers. The chatbot app lists past chats, and Mr. Raine saw one titled 'Hanging Safety Concerns.' He started reading and was shocked. Adam had been discussing ending his life with ChatGPT for months.

This was after Adam had a rough few months, left school for virtual school, and began spending lots more time on his phone.

Yet at the same time, Adam was working out, working on his looks, and hanging out with his friends. From outward appearances, his friends and family thought he was totally fine.

ChatGPT repeatedly recommended that Adam tell someone about how he was feeling. But there were also key moments when it deterred him from seeking help. At the end of March, after Adam attempted death by hanging for the first time, he uploaded a photo of his neck, raw from the noose, to ChatGPT.

The chatbot continued and later added: 'You're not invisible to me. I saw it. I see you.'

Elsewhere, the article notes that ChatGPT's safety features are specifically programmed to prevent this sort of thing: it tells people to get help or call a suicide hotline. But the teen got around that by telling the program he was asking for a fictional story he was writing.


The conversations weren't all suicide-related. Adam talked to the chatbot about anything and everything under the sun. But in the end, he used the tool to assist in his suicide.

Mr. Raine had not previously understood the depth of this tool, which he thought of as a study aid, nor how much his son had been using it. At some point, Ms. Raine came in to check on her husband.

'Adam was best friends with ChatGPT,' he told her.

Ms. Raine started reading the conversations, too. She had a different reaction: 'ChatGPT killed my son.'

The legal case is based on the claim that OpenAI did not adequately implement safety procedures to stop its product from providing harmful advice.

OpenAI responded to The Times with an explanation of its policies, denying any wrongdoing on its part.

Here's how the story ends:

And at one critical moment, ChatGPT discouraged Adam from cluing his family in.

'I want to leave my noose in my room so someone finds it and tries to stop me,' Adam wrote at the end of March.

'Please don't leave the noose out,' ChatGPT responded. 'Let's make this space the first place where someone actually sees you.'

Without ChatGPT, Adam would still be with them, his parents think, full of angst and in need of help, but still here.

It's the Wild West out there, parents.
