I've seen some dark memes and stuff on Facebook, but I can't imagine what these folks are going through.

There's some seriously messed up stuff on the internet, and someone has to keep people safe. In this case, that someone was more than 140 people hired in Kenya to moderate content for Facebook.

[Graphic content ahead]

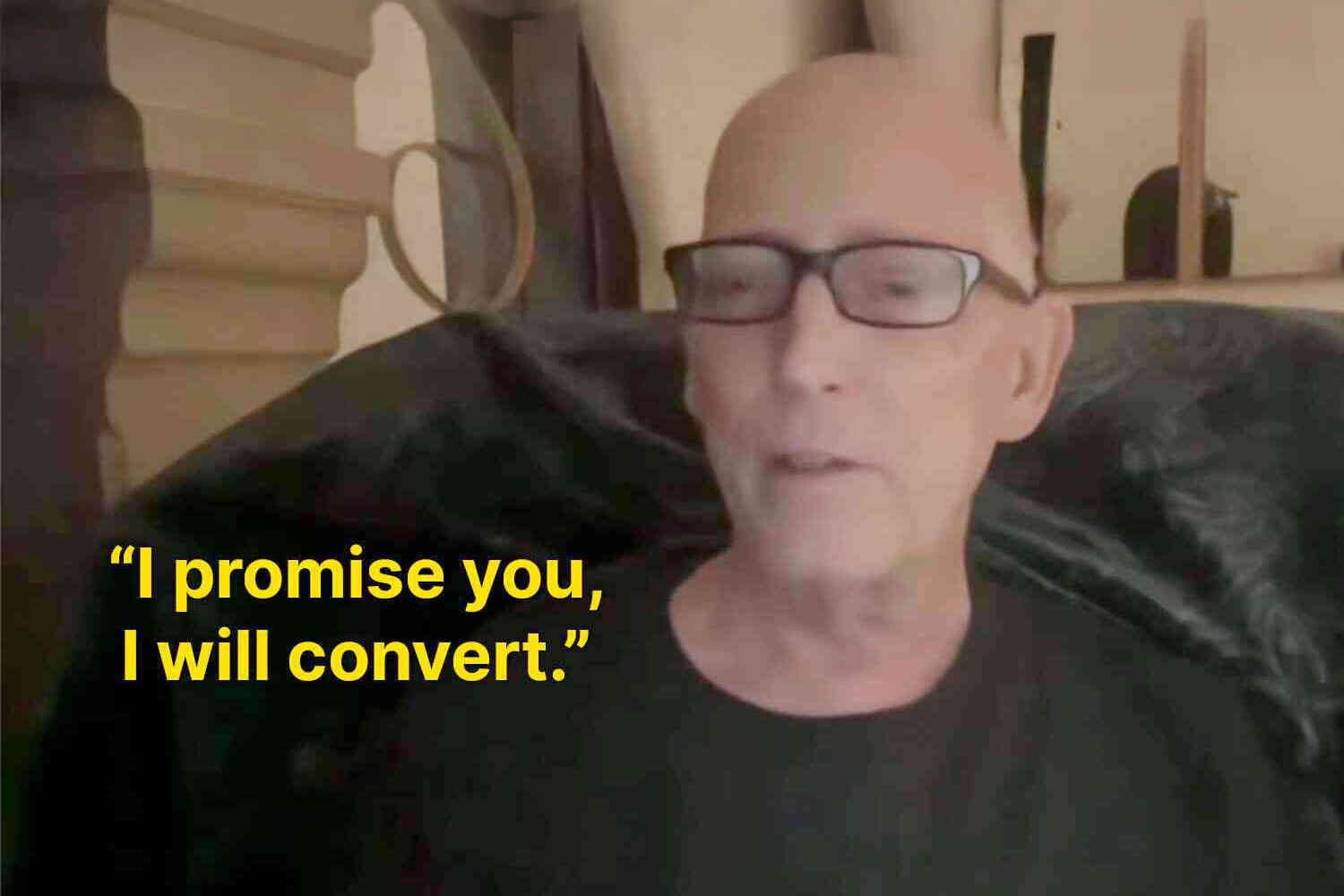

More than 140 Facebook content moderators have been diagnosed with severe post-traumatic stress disorder caused by exposure to graphic social media content including murders, suicides, child sexual abuse and terrorism.

I believe it. And I understand how you could get seriously messed up doing this.

If you're terminally online, whether it's X or Facebook or Instagram, you're going to see some of this stuff. Now imagine it's your job to moderate these websites and analyze reports of abuse material.

What a nightmare scenario.

The moderators worked eight- to 10-hour days at a facility in Kenya for a company contracted by the social media firm and were found to have PTSD, generalised anxiety disorder (GAD) and major depressive disorder (MDD), by Dr Ian Kanyanya, the head of mental health services at Kenyatta National hospital in Nairobi.

UP TO 10 HOURS A DAY!

Up to 10 hours in front of a computer screen, looking at literally the worst stuff imaginable. Pornography, death, violence. Up to 10 hours a day.

The mass diagnoses have been made as part of a lawsuit being brought against Facebook's parent company, Meta, and Samasource Kenya, an outsourcing company that carried out content moderation for Meta using workers from across Africa.

The images and videos including necrophilia, bestiality and self-harm caused some moderators to faint, vomit, scream and run away from their desks, the filings allege.

The lawsuit is basically taking the position that forcing people to moderate this content is beyond what normal humans can mentally stand. And, believe me, I wouldn't wish this work on my worst enemy.

At least 40 of the moderators in the case were misusing alcohol, drugs including cannabis, cocaine and amphetamines, and medication such as sleeping pills. Some reported marriage breakdown and the collapse of desire for sexual intimacy, and losing connection with their families. Some whose job was to remove videos uploaded by terrorist and rebel groups were afraid they were being watched and targeted, and that if they returned home they would be hunted and killed.

Facebook and other large social media and artificial intelligence companies rely on armies of content moderators to remove posts that breach their community standards and to train AI systems to do the same.

The hope, obviously, is to train AI to do the moderation work that humans do now. But as things stand, algorithms and AI still lack the human judgment needed to decide where to draw the line in borderline cases.

Still, if there was ever a job where I wanted robots to replace human beings, this is it.

The employees in the lawsuit were allegedly paid incredibly low wages, about one-eighth of what their American counterparts earned, and the strain of the job was absolutely brutal, leading many to become reliant on drugs and alcohol.

Medical reports filed with the employment and labour relations court in Nairobi and seen by the Guardian paint a horrific picture of working life inside the Meta-contracted facility, where workers were fed a constant stream of images to check in a cold warehouse-like space, under bright lights and with their working activity monitored to the minute.

Almost 190 moderators are bringing the multi-pronged claim that includes allegations of intentional infliction of mental harm, unfair employment practices, human trafficking and modern slavery and unlawful redundancy. All 144 examined by Kanyanya were found to have PTSD, GAD and MDD with severe or extremely severe PTSD symptoms in 81% of cases, mostly at least a year after they had left.

Yeah, yeah, yeah. Capitalism, free market, all that. But this is a really inhumane job. And while someone has to do it, you really need to provide working conditions that allow people to thrive.

This is a tragedy.

Foxglove, the organization backing the workers' case, didn't mince words:

'In Kenya, it traumatised 100% of hundreds of former moderators tested for PTSD … In any other industry, if we discovered 100% of safety workers were being diagnosed with an illness caused by their work, the people responsible would be forced to resign and face the legal consequences for mass violations of people's rights. That is why Foxglove is supporting these brave workers to seek justice from the courts.'

According to the filings in the Nairobi case, Kanyanya concluded that the primary cause of the mental health conditions among the 144 people was their work as Facebook content moderators as they 'encountered extremely graphic content on a daily basis, which included videos of gruesome murders, self-harm, suicides, attempted suicides, sexual violence, explicit sexual content, child physical and sexual abuse, horrific violent actions just to name a few'.

Meta and Samasource haven't addressed the allegations in the lawsuit, although Meta maintains that it has the highest industry standards.
